The AI Impact Report: Ground truth on how AI is changing engineering

Span Team

Jan 7, 2026

Last year, AI coding went mainstream. Most engineering teams crossed the adoption threshold, experimented with new tools, and made sure they were not falling behind.

As we head into 2026, the focus has changed. If 2025 was about AI adoption, 2026 will be about leverage.

Engineering leaders are being asked to explain AI’s real impact on how their software is built, but most are still stitching together surveys, anecdotes, and fragmented telemetry.

Introducing the all-new AI Impact Report

Today, we’re introducing the AI Impact Report inside Span.

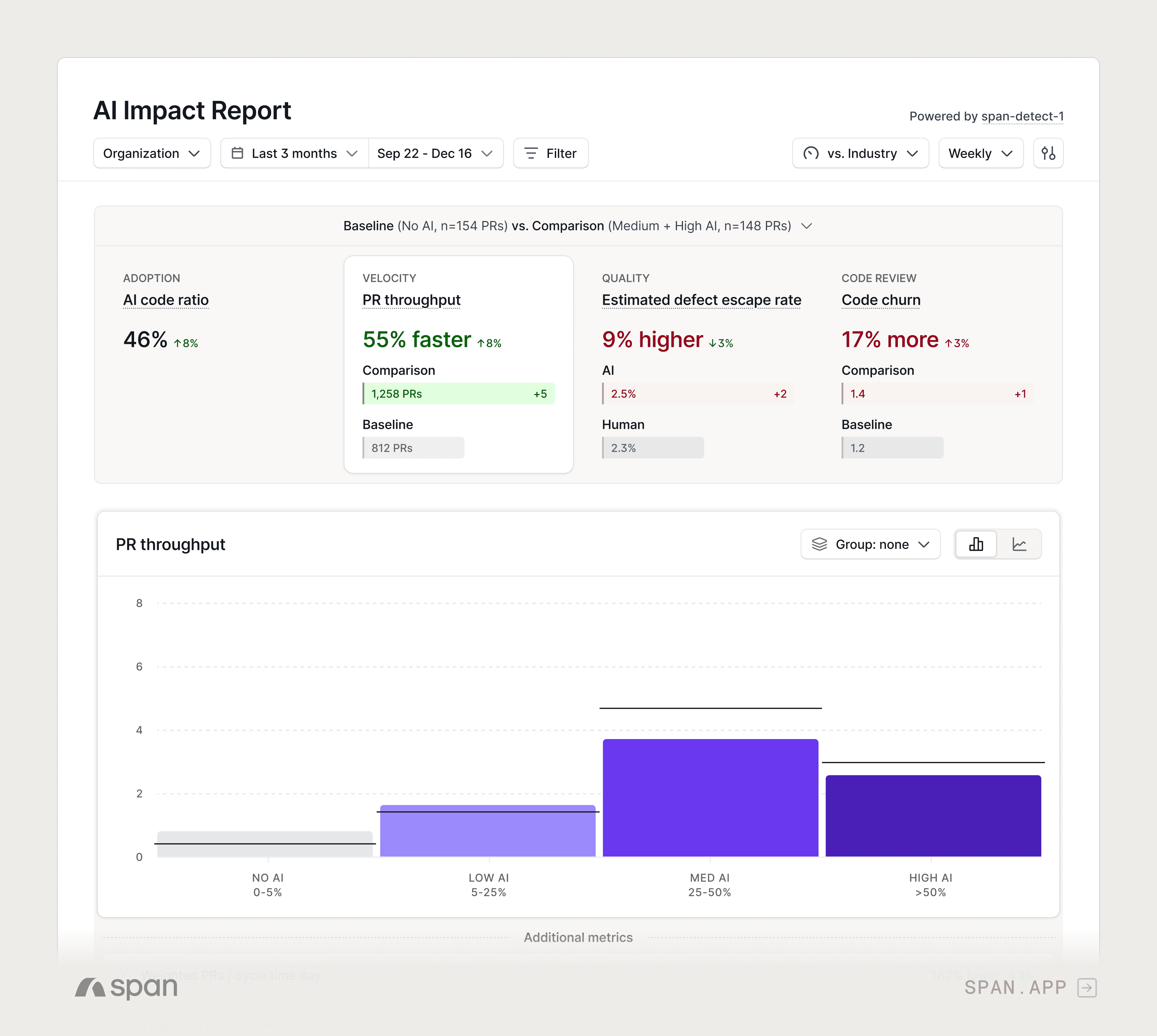

The AI Impact Report brings together AI usage and engineering outcomes into a single, connected view. It shows how AI-generated code is affecting delivery speed, code review effort, and code quality across your organization.

"With Span’s AI Impact Report, we finally have a way to move beyond gut instinct and rely on real evidence. It’s helping us see what truly drives results, and how we can keep leveling up as an organization."

Henrique Boregio, Director of Engineering at ClassPass

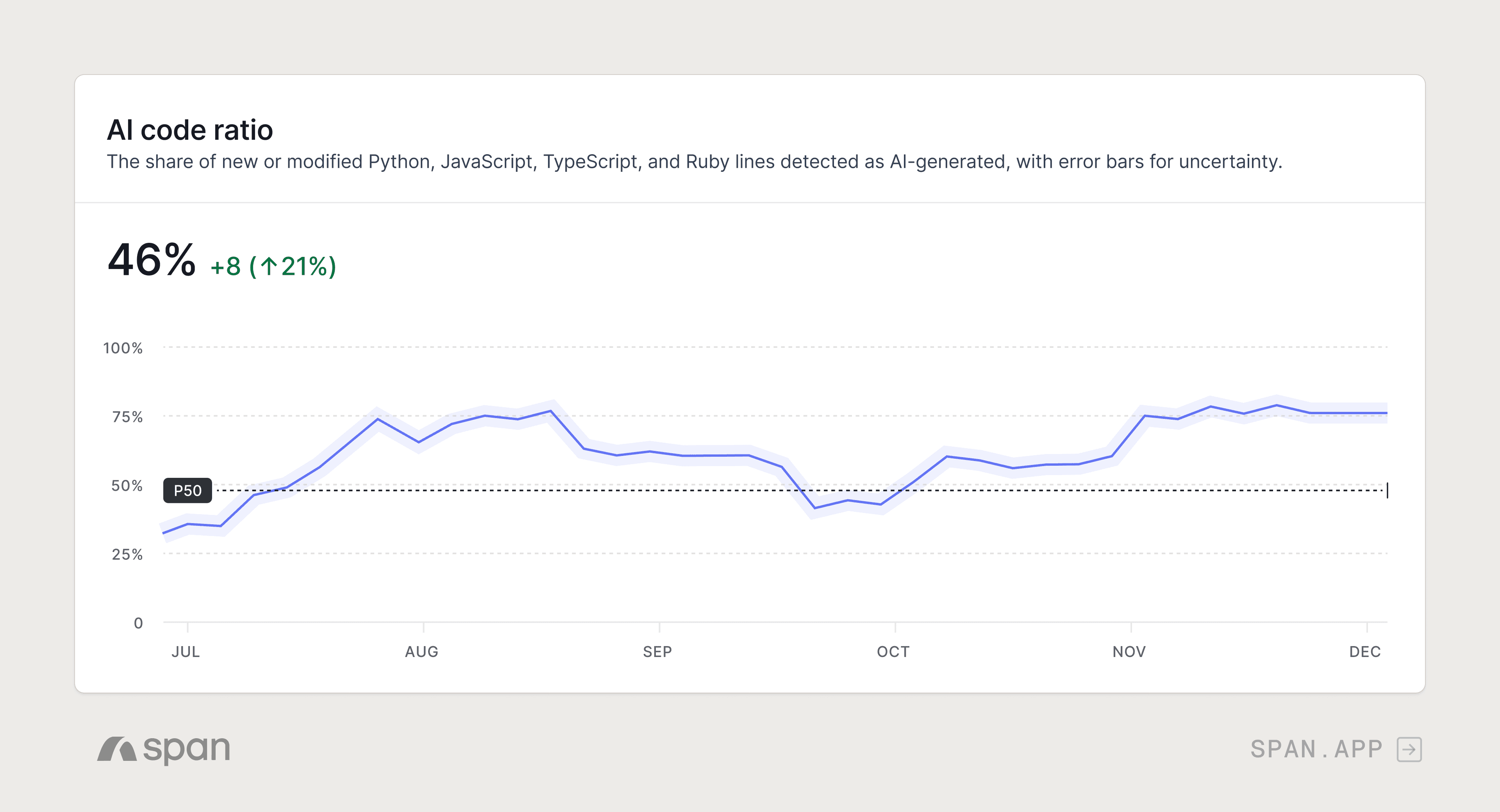

This builds on the foundation we laid in September with our AI code detection model, span-detect-1, which made Span the only platform measuring AI code in production with 95% accuracy across all AI tools. Measurement was just the start. The AI Impact Report connects that signal directly to the outcomes leaders care about.

Unlike other approaches in this space, the AI Impact Report does not rely on surveys, self-reported usage, or incomplete telemetry from AI tools. It measures AI where it actually shows up: in your codebase, alongside real engineering activity.

The AI Impact Report turns AI usage data into a clear view of how delivery, review, and quality change over time.

Measure AI adoption in production

Track AI-generated code over time and understand how adoption is changing across the organization. This gives leaders board-ready reporting grounded in production data, with confidence in what’s being measured.

Quantify impact on delivery and throughput

AI is widely believed to make teams faster. The AI Impact Report helps quantify how much and where. You can compare PR throughput across developers using no AI, low AI, medium AI, and high AI, and see how shipping patterns change as AI usage increases.
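As a rough illustration of this kind of comparison, the sketch below groups developers into the four usage buckets and averages their weekly PR counts. The bucket cutoffs, the `usage_bucket` and `throughput_by_bucket` helpers, and the sample data are all hypothetical; the report's actual thresholds and calculations are not described in this post.

```python
from collections import defaultdict
from statistics import mean


def usage_bucket(ai_share: float) -> str:
    """Map a developer's share of AI-generated code to a usage bucket.

    The cutoffs here are assumptions chosen for illustration only.
    """
    if ai_share == 0:
        return "no AI"
    if ai_share < 0.25:
        return "low AI"
    if ai_share < 0.60:
        return "medium AI"
    return "high AI"


def throughput_by_bucket(developers):
    """Average PRs per week for each bucket.

    developers: iterable of (ai_share, prs_per_week) pairs.
    """
    grouped = defaultdict(list)
    for ai_share, prs_per_week in developers:
        grouped[usage_bucket(ai_share)].append(prs_per_week)
    return {bucket: mean(values) for bucket, values in grouped.items()}


# Made-up sample data, not drawn from any real organization.
sample = [(0.0, 3.0), (0.0, 2.0), (0.1, 3.0), (0.4, 4.0), (0.8, 5.0), (0.9, 4.0)]
result = throughput_by_bucket(sample)
for bucket, avg in result.items():
    print(f"{bucket}: {avg:.1f} PRs/week")
```

A comparison like this shows correlation only. Differences between buckets can also reflect team, seniority, or type of work, which is why slicing by those dimensions matters.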

Understand impact on review and rework

Speed is only part of the picture. Leaders also need to understand what AI changes downstream.

The AI Impact Report shows how AI-generated code affects review cycles and rework. You can compare AI vs non-AI PRs and slice by team or type of work to see where AI is creating leverage, and where it may be introducing friction.

Early data we're seeing across teams

Across teams using the AI Impact Report, early data shows measurable differences between AI and non-AI work.

Developers using AI ship around 67% more pull requests per week on average.

PRs with AI-generated code spend around 30% more time in rework.

AI-generated PRs require about 10% more review cycles (1.51 vs. 1.38).

These are aggregate, directional signals that vary by team and context. Applied to your own organization, the AI Impact Report helps teams validate what they're already observing, and replace anecdote with evidence drawn from real data.
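For readers who want to check how a percentage like the review-cycle figure relates to the underlying averages, here is a minimal sketch of the arithmetic. The `relative_increase` helper is a hypothetical name, and the only inputs are the two review-cycle averages quoted above (1.51 vs. 1.38).

```python
def relative_increase(ai_value: float, baseline: float) -> float:
    """Percentage by which ai_value exceeds baseline."""
    return (ai_value - baseline) / baseline * 100


# Review cycles per PR: 1.51 for AI-generated PRs vs. 1.38 for non-AI PRs.
review_cycle_increase = relative_increase(1.51, 1.38)
print(f"{review_cycle_increase:.1f}% more review cycles")  # prints "9.4% more review cycles"
```

The result, roughly 9.4%, is consistent with the "about 10%" stated above.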

Monitor code quality over time

Understanding how AI affects code quality is critical as usage scales. The AI Impact Report includes early quality signals focused on post-merge outcomes, including estimated defect escape rates up to 90 days after merge.

This capability is currently in private beta, and we are working closely with customers to refine the methodology. Reach out if you’re interested in testing this with your team.

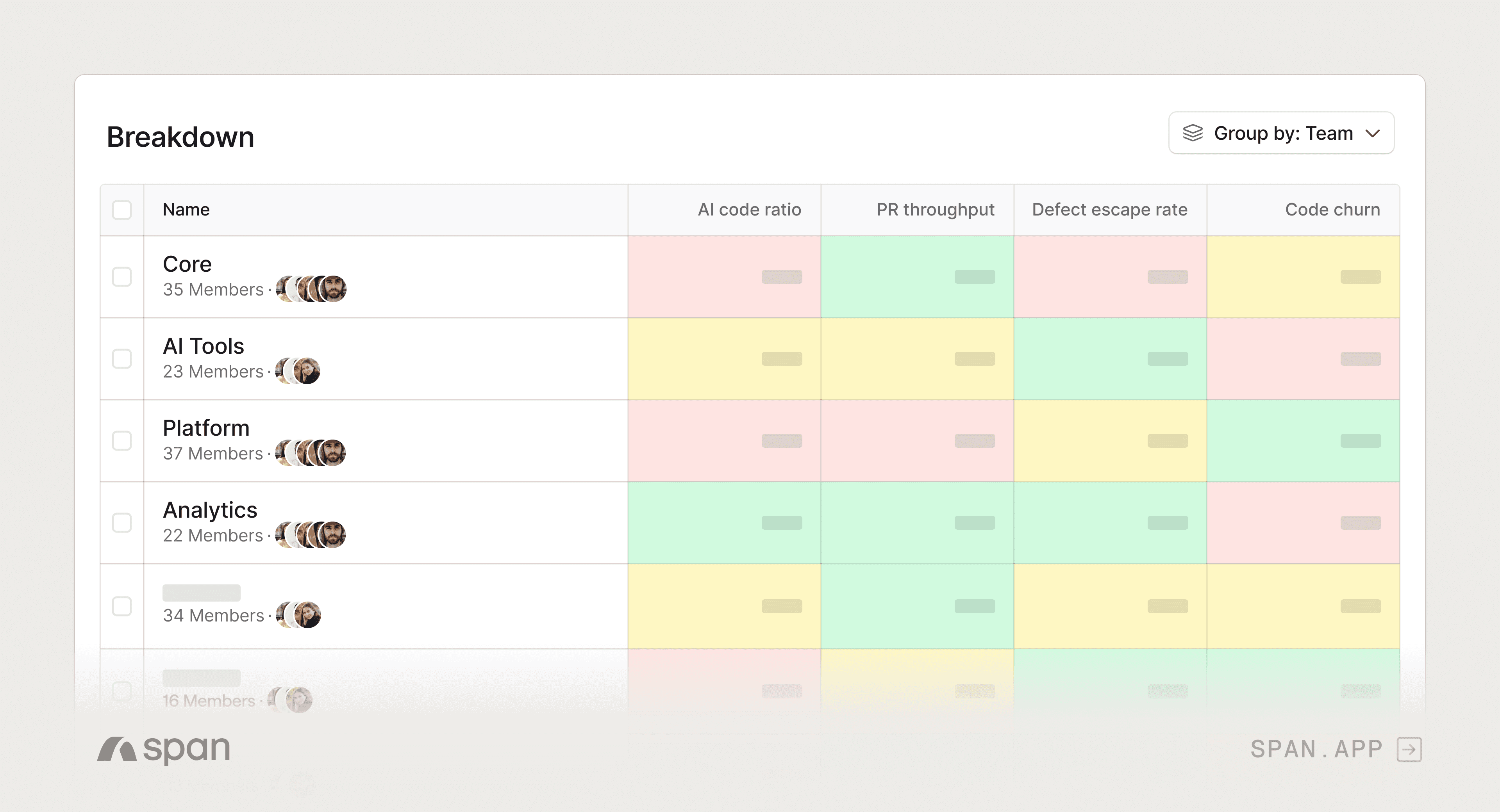

Build AI proficiency across the organization

Beyond metrics, the AI Impact Report helps leaders understand where AI is being used most effectively. By slicing results by team, individual, or type of work, leaders can identify AI power users, understand what good looks like, and intentionally scale those behaviors across the organization.

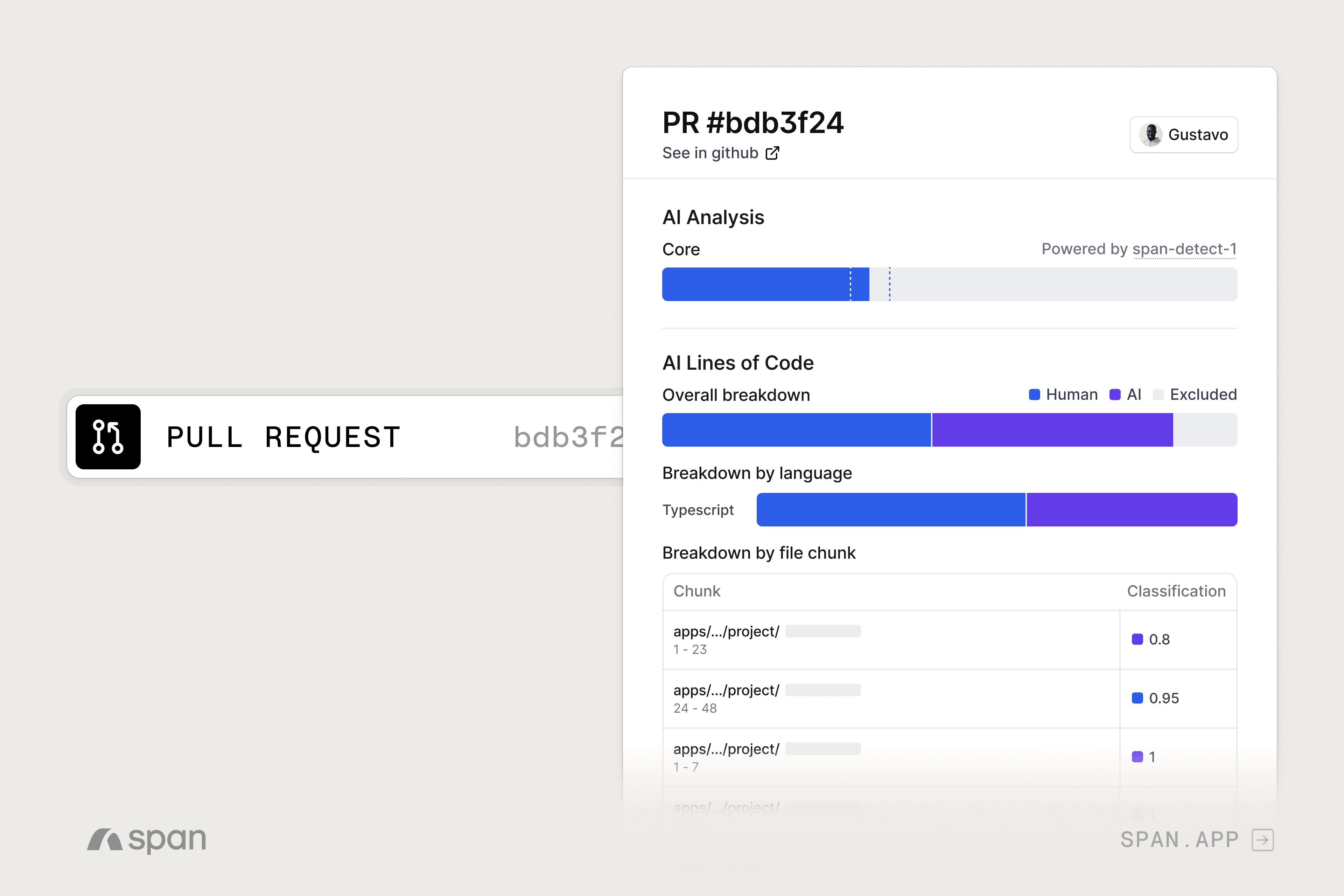

Traceable to real code

Every insight in the AI Impact Report is traceable back to real work. Leaders can drill down from trends to individual pull requests, verify AI detection at the code chunk level, and trust the data behind the decisions they’re making.

Turning AI adoption into leverage

AI adoption was the necessary first step. But adoption alone does not create advantage.

The AI Impact Report is built for the next phase: helping engineering leaders understand what AI is actually changing inside their teams, where it creates leverage, and where it introduces new tradeoffs. With clear, source-level evidence, leaders can move beyond intuition and make deliberate choices about how AI is used across the software development lifecycle.

That is how AI adoption turns into real, sustained leverage.

Everything you need to unlock engineering excellence